Designing Safeguards: New York’s AI Rules as Model or Warning

New York’s posture on AI governance is not a single policy; it’s a curated stack of guardrails intended to pair rapid deployment with visible accountability. Far from regulatory theater, the approach is a deliberate architecture designed to channel risk, incentivize disclosure, and preserve a healthy market for experimentation. The core question is whether these safeguards become a scalable model or a cautionary tale for a federal system still learning to govern this technology, and that question sits at the center of a broader tension between public control and private risk-taking.

Anchor and inference

This governance design begins with an anchor: transparency obligations around model use in consumer-facing AI applications. Firms must disclose when an AI system is used for decision-making that affects a consumer, and they must provide a plain-language explanation of the logic, data inputs, and limitations. The motive is twofold: to empower consumers with intelligible explanations and to create an audit trail that regulators can follow when things go wrong. The effect is a predictable baseline that reduces information asymmetry between builders, buyers, and overseers.

Seen through an investor’s lens, this anchor matters: disclosure reduces uncertainty about risk, and predictability improves capital allocation. If New York’s disclosures become a de facto standard, startups can design to a known regulatory baseline rather than a moving target. But the same clarity could also nudge players toward “safe-by-default” products that avoid high-risk features, potentially slowing breakthroughs that depend on riskier experimentation.

Narrowing the scope without suffocating curiosity

The safeguards govern not only what a model is but how it is used. The regime prioritizes human-in-the-loop verification for high-stakes decisions in domains such as financial services, health, and housing. This requirement threads a public-interest needle: it ensures that automation augments, rather than replaces, human judgment in consequential domains. It’s a nuanced stance that recognizes automation’s productivity gains while insisting on human override in decisions with meaningful real-world impact.

Critics argue that such constraints could become a de facto barrier to entry for nimble startups. Others welcome the predictability they bring to a field where capabilities can jump sharply with little notice. The tension is not merely philosophical; it shapes capital allocation. Funds may flow toward domains where governance is transparent and enforceable, while high-risk, high-reward experiments migrate to less regulated jurisdictions. In other words, the policy design reshapes where money goes, not just how machines behave.

Cross-border cascades and the "nanny-state" critique

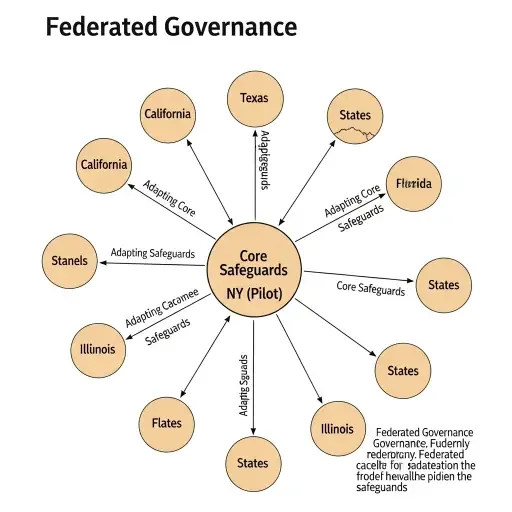

A nationwide question lurks beneath the New York debate: will states converge on a shared guardrail template, or will a patchwork of state rules create a costly labyrinth? The risk is twofold. First, a disjointed landscape could slow national-scale AI adoption, raising compliance costs for multi-state players and deterring cross-state experimentation. Second, tighter state controls may push innovation toward jurisdictions with looser oversight, producing regulatory arbitrage rather than a coherent national standard.

Proponents of the New York approach argue that a localized proof of concept can scale: robust, enforceable safeguards can establish baseline consumer protections, data rights, and accountability without federal overreach. Opponents warn that what begins as protective may become chilling, earning a “nanny-state” label that stings not only enthusiasts but also the businesses the rules were meant to support. The truth likely lies in the middle: a system in which states serve as laboratories of governance, held together by a federal spine of shared safety standards, interoperability protocols, and enforcement reciprocity.

Economic implications and the governance premium

From an investor’s vantage point, the policy design adds a governance premium: predictable risk signals, auditable decision trails, and explicit human oversight in high-stakes sectors. That premium can justify investment in better-quality data, more robust model governance, and stronger incident response. Yet there is also a cost: compliance overhead, potential delays in deployment, and the possibility that risk-aware but capital-light entrants are edged out by incumbents with compliance muscle. The question is whether the premium pays off through long-run resilience and consumer trust, or whether the friction erodes competitiveness in edge cases that test the limits of current regulatory imagination.


Discipline as inspiration, not extinguishment

New York’s safeguards are a disciplined invitation to treat governance as a product feature: a design choice that shapes not only what gets built but how it performs in the market. If the country learns to treat guardrails as architecture rather than hindrance, the U.S. could emerge with a dual advantage: rapid AI deployment in clearly acceptable uses, coupled with a robust culture of public oversight that makes breakthroughs durable and trusted.

But if the guardrails become a static barrier, or if states sprint ahead with incompatible rules, the nation risks a governance lag that undermines confidence and investment. The corrective is not centralized power but a collaborative architecture: interoperable standards, shared risk dashboards, and a federally anchored enforcement framework that respects state experiments while keeping opportunity open across a single national market.

Recursion and recovery: the memory of a model that can be trusted

The final takeaway is a recursive principle: guardrails are not walls; they are a form of institutional memory. They reveal, in real time, what the market can bear, what the public will tolerate, and where the line between protection and progress should move next. Deployed well, New York’s approach offers a living blueprint for scaling governance without throttling imagination. Applied nationally, it could set a shared rhythm: a way to harness rapid advances in machine learning while keeping the human contract intact.

In sum, the New York model is a test of governance design at scale: a blueprint that may light a national path or warn of drift toward fragmentation. The outcome will depend on how the state pairs clarity with flexibility, enforcement with experimentation, and protection with opportunity. For investors, policymakers, and builders alike, the next chapters will reveal whether this is a lighthouse or a mirror reflecting our ambitions back at us as we navigate an era of intelligent machines.

Sources

Analysis of New York State AI safeguards, industry responses, and federalism considerations drawn from public policy releases, regulatory text, and market commentary.