The headline was simple—growth softened. But beneath that sentence live three distinct signals investors often conflate: ARR quality, product mix, and guidance framing. Datadog’s numbers showed decelerating top‑line growth; its commentary, however, emphasized higher‑value telemetry and new pricing primitives tied to AI observability. Those two facts pull in opposite directions for valuation, but they are not contradictory.

Reading the quarter requires parsing what is cyclical—renewal timing, macro budget freezes—from what is structural: instrumentation for AI workflows.

The composition of the numbers tells the story. Management reported slowing new ARR but higher dollar retention in particular suites: APM-adjacent and newer model-centric services. Product release notes and marketing have shifted from “infrastructure health” language to “model reliability,” including widgets for feature-store observability and latency heatmaps tuned specifically to embedding and transformer workloads. That shift matters because AI workloads are both more telemetry-hungry and, once instrumented, more predictable in their spend patterns: model training and inference pipelines generate continuous, high-cardinality signals that platform vendors can meter.

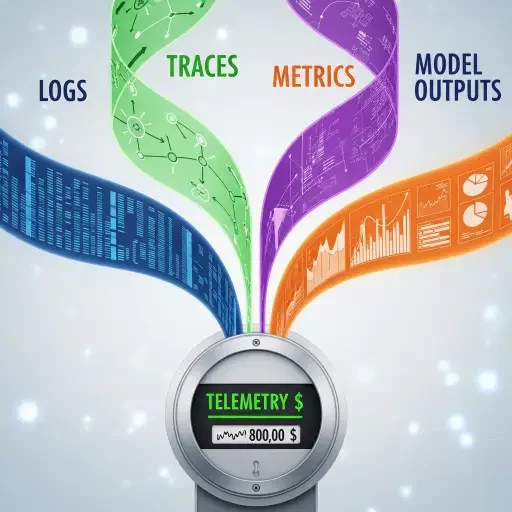

Why does predictability matter? Because ARR that is sticky and metered around model consumption is easier to monetize with tiered or usage-based pricing. Datadog’s recent introduction of model-aware pricing buckets and custom metrics bundles is an architectural nudge: it aligns the company’s product incentives with customers’ incentive to instrument models comprehensively. In short, Datadog is making it cheaper to send more telemetry and easier to attribute that telemetry to billable units.

This is a platform play. Platforms win when they become the default place for signals that downstream buyers—SREs, ML engineers, compliance teams—cannot easily reconstruct elsewhere. The binding constraint here is technical: enterprises that standardize on telemetry pipelines reduce integration cost and governance risk. Datadog’s ecosystem integrations—from feature stores to managed inference endpoints—shorten the path between model observability and operational SLAs. When the unit economics of instrumenting models favor centralized telemetry, Datadog captures a growing slice of per‑model economics. The product moves convert brittle, ad‑hoc monitoring spend into repeatable, high‑margin platform revenue.

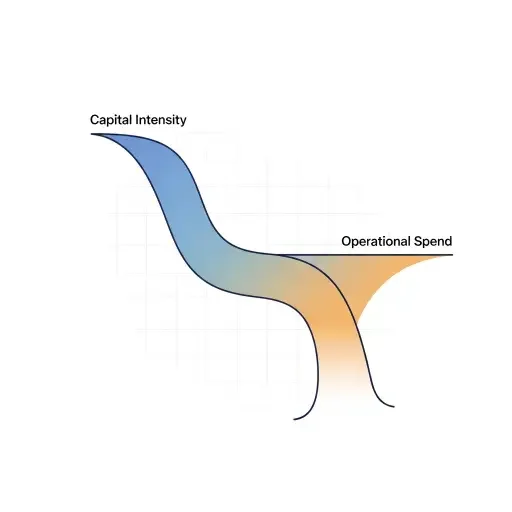

Short-term volatility is real. Street expectations were set on prior multi-quarter expansion rates, so misses produce an outsized re-rating. But volatility does not equal structural decline. Two market-level facts cushion the thesis. First, AI adoption follows an S-curve: early spikes in capital intensity, such as GPU fleets, are followed by persistent operational spend on monitoring, feature pipelines, and governance. Second, buyers who have invested in instrumentation rarely unwind those investments; switching costs are nontrivial. That combination of longer adoption tails and high switching costs supports higher lifetime value even if near-term bookings wobble.

The main risk is bundling. If customers migrate to closed-loop platforms offered by cloud hyperscalers that bundle telemetry, Datadog faces pricing compression. Hyperscalers could cross-subsidize model telemetry as a loss leader to keep compute customers. But two countervailing realities limit that threat. First, hyperscaler telemetry is often vertically integrated and lacks the neutral, multi-cloud observability that many regulated enterprises demand. Second, Datadog’s partner network and marketplace create a stickiness layer that is not trivial to reconstitute inside a cloud provider’s stack. The cloud bundling risk exists, but the enterprise tradeoff (neutrality, integrations, and governance) keeps Datadog in many multi-cloud architectures.

Analysts should parse ARR subtleties. Look beyond headline growth to cohort retention and usage density per customer. If average telemetry per AI model rises, revenue per customer will drift up even at lower new‑customer velocity. Margin trajectory also matters: usage‑based pricing can scale gross margins if Datadog manages ingestion costs and moves customers toward higher‑margin services—alerts, synthetic checks, AI model‑specific analytics.
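
The density argument can be made concrete with a small decomposition. The sketch below is illustrative only: the `Quarter` class, its field names, and every number in it are hypothetical and are not drawn from Datadog's filings. It assumes revenue per customer is simply models per customer times metered telemetry per model times a flat unit price, which is a simplification of any real pricing scheme.

```python
from dataclasses import dataclass


@dataclass
class Quarter:
    """Hypothetical inputs for one quarter (not real Datadog data)."""
    customers: int
    models_per_customer: float
    telemetry_units_per_model: float  # metered units, e.g. millions of events
    price_per_unit: float             # assumed flat price per metered unit

    def revenue_per_customer(self) -> float:
        # Usage-based revenue: models x telemetry per model x unit price.
        return (self.models_per_customer
                * self.telemetry_units_per_model
                * self.price_per_unit)

    def total_revenue(self) -> float:
        return self.customers * self.revenue_per_customer()


def growth(current: float, prior: float) -> float:
    """Period-over-period growth as a fraction."""
    return current / prior - 1


# Customer count barely moves, but telemetry per model rises 20%.
prior = Quarter(customers=1000, models_per_customer=4,
                telemetry_units_per_model=50, price_per_unit=2.0)
current = Quarter(customers=1020, models_per_customer=4,
                  telemetry_units_per_model=60, price_per_unit=2.0)

customer_growth = growth(current.customers, prior.customers)
rpc_growth = growth(current.revenue_per_customer(), prior.revenue_per_customer())
revenue_growth = growth(current.total_revenue(), prior.total_revenue())

print(f"customer growth:             {customer_growth:.1%}")
print(f"revenue per customer growth: {rpc_growth:.1%}")
print(f"total revenue growth:        {revenue_growth:.1%}")
```

Under these made-up inputs, nearly all of the revenue growth comes from density rather than from new logos, which is the pattern the paragraph above asks analysts to look for.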

For investors, the actionable principle comes down to three measurements: measure telemetry density, not just customer count; track product mix shifts toward model-centric suites; and watch gross margin expansion as an early signal of successful monetization. Together, these three metrics form a cleaner signal set that separates noise from thesis validation.
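
One way to track these three metrics quarter over quarter is sketched below. Everything here is an assumption for illustration: the function names, the dictionary keys, and the figures are hypothetical, and a real analysis would depend on what a company actually discloses (telemetry volume, for instance, is rarely reported directly).

```python
def telemetry_density(telemetry_units: float, customers: int) -> float:
    """Metered telemetry units per customer."""
    return telemetry_units / customers


def mix_share(model_centric_revenue: float, total_revenue: float) -> float:
    """Share of revenue coming from model-centric suites."""
    return model_centric_revenue / total_revenue


def gross_margin(revenue: float, cost_of_revenue: float) -> float:
    """Gross margin as a fraction of revenue."""
    return (revenue - cost_of_revenue) / revenue


def metrics(q: dict) -> dict:
    """Compute the three tracking metrics for one quarter."""
    return {
        "telemetry_density": telemetry_density(q["telemetry_units"], q["customers"]),
        "model_centric_mix": mix_share(q["model_centric_revenue"], q["revenue"]),
        "gross_margin": gross_margin(q["revenue"], q["cost_of_revenue"]),
    }


def signals_improving(prev: dict, curr: dict) -> dict:
    """For each metric, report whether it rose from prev to curr."""
    before, after = metrics(prev), metrics(curr)
    return {name: after[name] > before[name] for name in before}


# Hypothetical quarterly figures (revenue and cost in $M), not real data.
q1 = {"customers": 1000, "telemetry_units": 200_000, "revenue": 400.0,
      "model_centric_revenue": 40.0, "cost_of_revenue": 88.0}
q2 = {"customers": 1020, "telemetry_units": 244_800, "revenue": 480.0,
      "model_centric_revenue": 72.0, "cost_of_revenue": 96.0}

signals = signals_improving(q1, q2)
for name, improved in signals.items():
    print(f"{name}: {'up' if improved else 'flat or down'}")
```

Reporting each metric separately, rather than folding them into a single score, keeps it visible when one signal diverges from the other two.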

Datadog’s quarter is a timing story with structural underpinnings. Near-term headline volatility is the market recalibrating a platform’s growth cadence; the deeper information is that enterprises are instrumenting models, and that instrumentation is fertile ground for a neutral observability vendor. If you accept that platform power rests on technical standards and integration costs as the binding constraint, Datadog’s post-peak optimism is not a consolation prize. It is evidence of that structural shift.

Imagine a supply chain of signals—logs, traces, metrics, model outputs—converging into a single billing meter. Whoever masters that meter shapes the economics of AI operations. Datadog is positioning itself to be one such meter.

Sources

Datadog earnings reports, guidance, and investor calls; company product announcements and technical documentation; enterprise software market analysis from industry research firms; observability and AI workload trend reports.