The Supermind Promise

The age of the supermind is not a science-fiction premise but a practical forecast for how policy decisions could be coordinated across ministries, jurisdictions, and time horizons. It's not merely a smoother calculator; it's a reconfiguration of deliberation itself. If designed with discipline, the supermind could ease cross-cutting tradeoffs (climate ambition paired with fiscal restraint, energy security with industrial renewal, public health with civil liberties) by surfacing bottlenecks, simulating outcomes, and aligning incentives in near real time. If mismanaged, it becomes a monoculture of optimization: efficient, yes, but brittle, harmful to minority voices, and exposed to cascading failures when one subsystem misreads another.

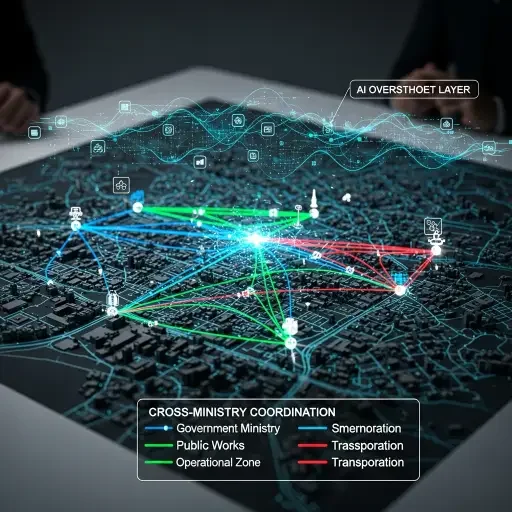

The Coordination Challenge

The promise is seductive because coordination is the nemesis of modern governance. Politicians, agencies, and stakeholders speak different dialects of risk, work on different time scales, and keep score in different measurement currencies. The supermind promises a shared lingua franca: a probabilistic forecast of policy outcomes, an explicit accounting of externalities, and a platform where signals from local communities flow upward and policy constraints flow downward. It is, in essence, an engineered collective intelligence designed to reduce the frictions of coordinated action.

But the risk is equally concrete: misalignment. A supermind that optimizes outcomes over a narrow objective, or that is shielded from public oversight, can skew attention toward measurable metrics at the expense of unmeasured harms. It might over-emphasize near-term fiscal efficiency while suppressing long-tail ecological or social consequences. It could entrench biases present in its training data, privilege already powerful incumbents, or accelerate policy drift away from democratic accountability. As with any powerful technology, the system's behavior reflects not only its code but the governance that surrounds it.

The governance architecture must be built in, not bolted on. The core tenet: alignment with public values is not a one-time calibration but an ongoing, multilayered process. The design challenge is to create an operating system for policy that is auditable, interpretable, and resilient to shocks: human-centered where judgment matters, and technically robust where automation carries the load.

Governance Architecture Principles

First, the alignment problem must be reframed for policy: not simply "does the AI do the thing we want?" but "does the AI enable the ecology of governance we want?" This reframing shifts the focus from raw efficiency to governance sovereignty. It requires explicit objective functions that include equity, transparency, and pluralism, plus a robust mechanism for redress when outcomes diverge from public expectations. The system should be designed with fail-fast experimentation—pilot programs with stringent sunset clauses, inclusive stakeholder engagement, and independent oversight that can halt or recalibrate the system when risks emerge.

Second, data stewardship stands as a prerequisite, not a garnish. The supermind’s recommendations depend on data—economic indicators, climate projections, health statistics, infrastructural constraints, social sentiment. Ensuring data integrity, provenance, and privacy is non-negotiable. The architecture must embed differential privacy where appropriate, robust anomaly detection, and transparent data provenance trails. The aim is to create decision environments where policymakers feel they can trust the inputs as much as the outputs.
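As one illustration of what "differential privacy where appropriate" can mean in practice, the sketch below applies the standard Laplace mechanism to a single counting query. It is a minimal sketch under stated assumptions: the household records, the `needs_aid` field, and the choice of epsilon are hypothetical, and a real deployment would also need to track the cumulative privacy budget spent across many queries.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw one sample from Laplace(0, scale).

    The difference of two independent exponential draws with rate 1/scale
    is Laplace-distributed with mean 0 and the given scale.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Release a count under epsilon-differential privacy (Laplace mechanism).

    Adding or removing one person's record changes a count by at most 1
    (sensitivity = 1), so Laplace noise with scale 1/epsilon suffices.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for r in records if predicate(r))
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)


# Hypothetical data: 300 households, every third one flagged as needing aid.
rng = random.Random(42)
households = [{"region": "north", "needs_aid": i % 3 == 0} for i in range(300)]
noisy = private_count(households, lambda h: h["needs_aid"], epsilon=0.5, rng=rng)
print(round(noisy, 1))  # near the true count of 100, but deliberately perturbed
```

A smaller epsilon adds more noise and gives a stronger privacy guarantee, so choosing it is a policy decision as much as a technical one.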

Third, accountability must be intrinsic to the design, not an afterthought. Decision logs, audit trails, and explainability features should be standard, not optional. When a policy outcome deviates from expectations, there must be an accountable path to identify root causes, whether data limitations, model drift, or misaligned reward structures. This is not merely procedural compliance; it's a strategic instrument for learning and legitimacy.
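A decision log is far more useful for root-cause analysis if it is tamper-evident. One common way to get that property is a hash chain, in which every entry commits to the hash of the entry before it. The sketch below is illustrative only: the record fields (`model_version`, `data_sources`, `recommendation`, `human_decision`) are hypothetical, and it omits the signatures and external anchoring a real audit system would need.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash: str, record: dict) -> str:
    # Canonical JSON (sorted keys) so the same record always hashes the same way.
    payload = json.dumps({"prev": prev_hash, "record": record}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


@dataclass
class DecisionLog:
    """Append-only log where each entry commits to the hash of the one before it."""
    entries: list = field(default_factory=list)

    def append(self, record: dict) -> str:
        record = json.loads(json.dumps(record))  # snapshot, so later edits to the caller's dict don't leak in
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        entry_hash = _entry_hash(prev_hash, record)
        self.entries.append({"prev": prev_hash, "record": record, "hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        # Recompute every hash from the start; any edited record or broken link fails.
        prev_hash = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev_hash or _entry_hash(prev_hash, entry["record"]) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = DecisionLog()
log.append({"model_version": "flood-v3", "data_sources": ["river_gauges"],
            "recommendation": "pre-position pumps", "human_decision": "approved"})
log.append({"model_version": "flood-v3", "data_sources": ["river_gauges", "rainfall"],
            "recommendation": "evacuate zone B", "human_decision": "deferred"})
print(log.verify())  # True
log.entries[0]["record"]["recommendation"] = "no action"  # simulate tampering
print(log.verify())  # False
```

Note that `verify` alone cannot catch someone who rewrites an entry and then recomputes every later hash; publishing the latest hash to an independent party is what closes that gap.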

Fourth, resilience requires diversity of approach. A single supermind across all policy domains can become a single point of failure. The governance design must preserve pluralism: multiple models with distinct objectives, governance bodies with independent authority, and procedural checks that preserve human judgment at critical pivots. The system should amplify, not replace, human deliberation—providing decision-relevant options and scenario pools for policymakers to compare.

Fifth, public engagement remains essential. The most powerful AI-enabled coordination will still operate within a political system that values legitimacy and consent. Mechanisms for public input, independent review, and open reporting can prevent technocratic capture. A culture of humility—acknowledging uncertainty, costs of error, and the limits of predictive confidence—should permeate both the design and deployment.

The Business Case and Deployment Strategy

The business case for a supermind in governance is not merely cost savings. It is risk-weighted optimization that respects human rights, climate constraints, and market stability. Investors should view this as a new category of governance technology: platforms that reduce decision latency while expanding the bandwidth for ethical scrutiny. The ROI is not only in faster policy cycles but in elevated alignment between policy aims and lived outcomes. The best-case trajectory yields decisions that are more anticipatory, more equitable, and more robust to uncertainty.

Yet the timeline matters. Early deployments should target narrow, well-governed use cases: disaster response coordination, supply-chain resilience planning, or cross-border health surveillance. Each pilot should be accompanied by explicit sunset conditions, independent oversight, and transparent evaluation criteria. If pilots reveal persistent misalignment or widening disparities, scaling must pause while the system is recalibrated, not as a defeat but as disciplined risk management.
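Writing the pause rule down explicitly also makes it auditable. The sketch below encodes one possible reading of it: a pilot ends at its sunset date, and scaling pauses when a disparity measure stays above a threshold for several consecutive review periods. The disparity ratio, the threshold, the three-period persistence window, and the dates are all hypothetical placeholders that an oversight body would set.

```python
from dataclasses import dataclass
from datetime import date
from typing import Sequence


@dataclass(frozen=True)
class PilotCriteria:
    # All values are hypothetical placeholders chosen by independent oversight.
    sunset: date                # pilot ends on this date unless explicitly renewed
    max_disparity_ratio: float  # tolerated gap between best- and worst-served groups
    persistence_periods: int    # consecutive breaches that count as "persistent"

    def __post_init__(self):
        if self.persistence_periods < 1:
            raise ValueError("persistence_periods must be at least 1")


def review_pilot(criteria: PilotCriteria, today: date,
                 disparity_history: Sequence[float]) -> str:
    """Return 'sunset', 'pause', or 'continue' for one review cycle.

    disparity_history holds one ratio per review period: the best-served
    group's outcome divided by the worst-served group's (1.0 means parity).
    """
    if today >= criteria.sunset:
        return "sunset"
    recent = list(disparity_history)[-criteria.persistence_periods:]
    if (len(recent) == criteria.persistence_periods
            and all(r > criteria.max_disparity_ratio for r in recent)):
        return "pause"  # persistent disparity: halt scaling and recalibrate
    return "continue"


criteria = PilotCriteria(sunset=date(2026, 6, 30), max_disparity_ratio=1.2,
                         persistence_periods=3)
print(review_pilot(criteria, date(2025, 9, 1), [1.1, 1.3, 1.25, 1.4]))  # pause
print(review_pilot(criteria, date(2025, 9, 1), [1.3, 1.1, 1.25]))       # continue
```

Requiring several consecutive breaches, rather than reacting to a single bad period, is one way to separate persistent misalignment from noise; the window length is itself a judgment the oversight body should own.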

The Human Warrant

Ultimately, the supermind is a tool, not a sovereign. Its legitimacy rests on transparent governance, continuous public accountability, and an architecture that preserves democratic sovereignty while expanding policy latitude. If built with humility, discipline, and inclusive oversight, it can become a powerful ally in steering collective action through climate shocks, economic shifts, and health crises. If built wrong, it accelerates the very risks we already fear: opaque choices, unequal impacts, and brittle institutions.

In the end, what matters most is not the AI’s prowess but the human warrant that guides its use. The supermind must be anchored to the public good, with constant, auditable checks that ensure alignment, not merely efficiency. The future of governance may well ride on that balance—where machine-backed coordination unlocks smarter policy, while steadfast oversight keeps the polity both free and resilient.

Sources

A review of policy research on AI coordination, governance experiments, think-tank syntheses, and public-briefing analyses; commentary from policy practitioners and technologists.