We have, at present count, eight billion units of general intelligence walking around on this planet. Each one is capable of learning from minimal examples, reasoning through novel situations, creating genuinely original work, and, most remarkably, understanding context in ways that remain utterly beyond our silicon approximations. These units self-replicate, run on roughly 20 watts of power, and repair themselves while unconscious.

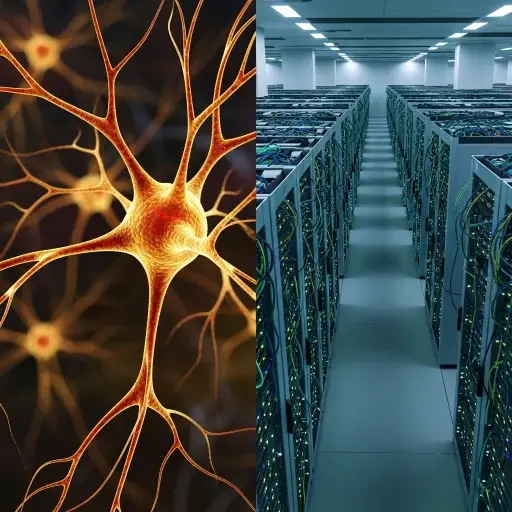

Evolution spent four billion years perfecting this technology. The human brain represents perhaps the most sophisticated information processing system in the known universe—a learning architecture so elegant it masters language through mere exposure, so robust it navigates infinite unpredictable scenarios, so creative it generates solutions that didn’t exist in its training data.

Yet the world’s wealthiest companies look at this achievement—this proof of concept—and their response is not to amplify it, support it, or even learn from it. Their response is to replace it.

The Question Nobody Dares Ask

Why do we need artificial general intelligence?

Not whether it’s technically interesting—of course it is. Not whether it’s possible—perhaps it is, given enough compute and data scraped from actual human intelligence. But why do we need it? What problem does AGI solve that investing in the eight billion general intelligences we already have wouldn’t solve better, cheaper, and faster?

The tech industry has constructed a circular logic so perfect it would be amusing if it weren’t burning through capital at civilizational scale: We need AI to replace human workers. Why? To increase productivity. For whom? For companies whose market value depends on… replacing more human workers. The snake swallows its tail and calls it disruption.

Meanwhile, the actual world—the one outside venture capital pitch decks—presents problems that are stubbornly, beautifully, irreducibly human. Educational systems that fail to reach millions of children who could flourish with proper attention. Elderly populations requiring care that no chatbot can provide. Mental health crises metastasizing in the vacuum left by atomized communities. Creative work that gives life meaning being systematically devalued because it can’t scale.

These problems share a common solution: more humans doing meaningful work. Not fewer. Not automated. More.

The Utilitarian Math Doesn’t Math

Consider the utilitarian calculus seriously for a moment. The greatest-good-for-the-greatest-number crowd should love more humans engaged in productive work. Teachers improving educational outcomes, nurses providing dignified care, craftspeople creating durable goods, artists enriching culture, counselors supporting mental health, community organizers building social capital.

Each of these roles requires precisely the capacities that make human intelligence special—empathy, contextual understanding, improvisational creativity, genuine presence, the ability to care about outcomes beyond optimization metrics.

Yet Silicon Valley has decided that the future involves fewer people doing these things. The logic is nakedly financial: human labor has friction, demands dignity, requires healthcare, forms unions, and most annoyingly, shares in value creation. Much cleaner to concentrate intelligence—and therefore economic power—in proprietary systems owned by a handful of corporations.

This is the unspoken truth beneath all the talk of “empowering” and “augmenting” human capabilities. The end goal isn’t augmentation. It’s substitution.

What We’re Actually Burning

The costs of the AGI race extend far beyond the billions in capital expenditure:

Environmental physics doesn’t care about your pitch deck. Published estimates put the emissions from training a single large model in the hundreds of tonnes of CO2-equivalent, comparable to the per-passenger footprint of hundreds of transatlantic flights. Data centers consume electricity at rates that strain regional grids. We are literally burning fossil fuels to build systems whose primary output is text that sounds plausible and images that look technically competent but semantically hollow.

Jobs aren’t just income streams. They’re dignity, purpose, social connection, skill development, and daily structure. The automation agenda—pursued with the subtlety of a hostile takeover—threatens to hollow out entire categories of meaningful work while concentrating wealth among those who own the replacement systems.

Every interaction with an LLM is an interaction not happening between humans. Every task automated is an opportunity for skill development lost. Every decision outsourced to an algorithm is an erosion of human judgment, agency, and self-trust. We’re training an entire generation to expect instant synthetic answers rather than to think, to value algorithmic speed over human reflection.

The second-order effects compound viciously: As human skills atrophy from disuse, we become more dependent on AI systems. As we become more dependent, we invest less in human development. As we invest less in humans, AI becomes comparatively more capable. The flywheel spins, and we call it progress.

The Conductor Has Left the Building

Perhaps most alarming is the absence of any coherent destination. The AGI race is a runaway train powered by venture capital, quarterly earnings pressure, and founder messiah complexes, with no one at the controls asking whether we should be on this train at all.

The people building these systems—technically brilliant, no question—often demonstrate a stunning poverty of wisdom about human nature, social systems, and what actually makes life worth living. They’ve spent their careers optimizing engagement metrics, moving fast and breaking things, treating every system as a problem awaiting an algorithmic solution.

Now they want to rebuild human intelligence itself, as if consciousness were just another engineering challenge: gradient descent at sufficient scale, add some RLHF, ship it. The hubris is breathtaking. Four billion years of evolutionary R&D, and they think they’ll crack it in a decade using architectures that did not exist ten years ago.

They speak endlessly of “alignment”—how do we make AI do what we want?—while remaining completely unaligned with human flourishing. They pursue AGI not because civilization needs it, but because it’s technically fascinating, because it might make them unfathomably rich, because in their narrow technocratic worldview, the capability to build something constitutes sufficient justification for building it.

The Alternative We’re Not Discussing

Imagine redirecting even twenty percent of the capital flowing into AGI toward amplifying human intelligence instead:

Universal access to world-class education, personalized to individual learning patterns. Lifelong learning systems that help people adapt and grow throughout increasingly extended careers. Better tools for human collaboration and creativity that genuinely assist rather than replace. Support systems allowing people to do meaningful work sustainably, without burning out by forty. Healthcare systems that treat mental health with the same seriousness as physical health.

This isn’t naïve technophobia. It’s a recognition that the limiting factor in human civilization has never been insufficient intelligence. We have eight billion intelligent agents already, most operating far below their potential due to lack of access, opportunity, education, and resources.

The breakthrough we actually need isn’t artificial general intelligence. It’s making human intelligence more accessible, more equitable, more sustainable, and more effective at solving real problems.

But that would require tech leaders to abandon their savior complexes, their visions of remaking the world in their image. It would require admitting that four billion years of evolution might have produced something more sophisticated than they can build in a few decades of throwing matrices at matrices.

Most of all, it would require answering the question they keep evading: In a world of human unemployment, environmental crisis, and social fragmentation, why are we racing to build machines that think, rather than investing in the billions of thinkers we’re systematically wasting?

The Emperor’s New Clothes

The AGI pursuit isn’t just misguided—it’s a catastrophic misallocation of civilization-level resources during a period when we can least afford such waste. We’re treating human intelligence as obsolete technology rather than as the solution itself. We’re automating meaning out of human existence in the name of efficiency. We’re disrupting stable lives in the name of innovation. We’re concentrating power among a handful of companies in the name of progress.

The most advanced intelligence we know of isn’t arriving in the next generation of transformer models. It’s already here—billions of humans whose potential we’re systematically squandering while we chase the mirage of silicon minds.

Someone needs to say it: The emperor has no clothes.

Nature already solved general intelligence. The solution is walking around, talking, creating, caring, and asking questions like “why are we doing this?” Maybe it’s time we started listening to it.

Sources

Analysis based on public tech industry statements, economic data on automation, and evolutionary biology literature